Responsible AI at the BBC

Like many media organizations, the BBC has been evaluating its position on rapidly advancing AI technologies. Over the past year, researchers Hannes Cools and Anna Schjøtt Hansen from the AI, Media & Democracy Lab at the University of Amsterdam have worked closely with the BBC's Responsible Innovation team. In two complementary projects, they studied how the BBC navigates the development and use of AI technologies such as large language models.

The first project, ‘Towards Responsible Recommender Systems at BBC?’, examined how transparency is understood and applied across various BBC teams and what challenges arise in doing so. It aimed to explore how the transparency principles in the Machine Learning Engine Principles (MLEP) can be operationalized across BBC teams.

The second project, ‘Exploring AI design processes and decisions as moments of responsible intervention’, focused on how responsible AI practices guided by the MLEP can be better integrated into AI system design within the BBC. This research followed the Personalisation Team and examined how responsible decision-making unfolds during AI design processes.

Six months ago, a Responsible AI symposium at BBC Broadcasting House in London focused on addressing industry challenges and establishing future research collaborations. Discussions revolved around how media organizations can go beyond superficial statements about transparency, human oversight, and privacy in AI development.

As the projects draw to a close, we extend our gratitude to the BBC and BRAID teams for their collaboration and look forward to more research partnerships in the future.

Similar news items

May 29

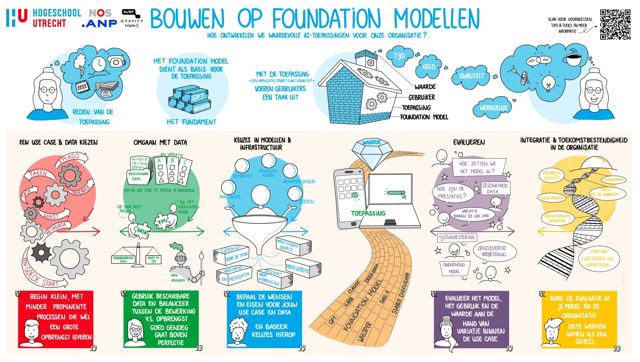

Building responsibly on foundation models: practical guide by Utrecht University of Applied Sciences and RAAIT

May 29

SER: Put people first in the implementation of AI at work

May 27

🌞 Open Space: AI meets Science Communication – will you take the stage?